About Me

Abridged Biography

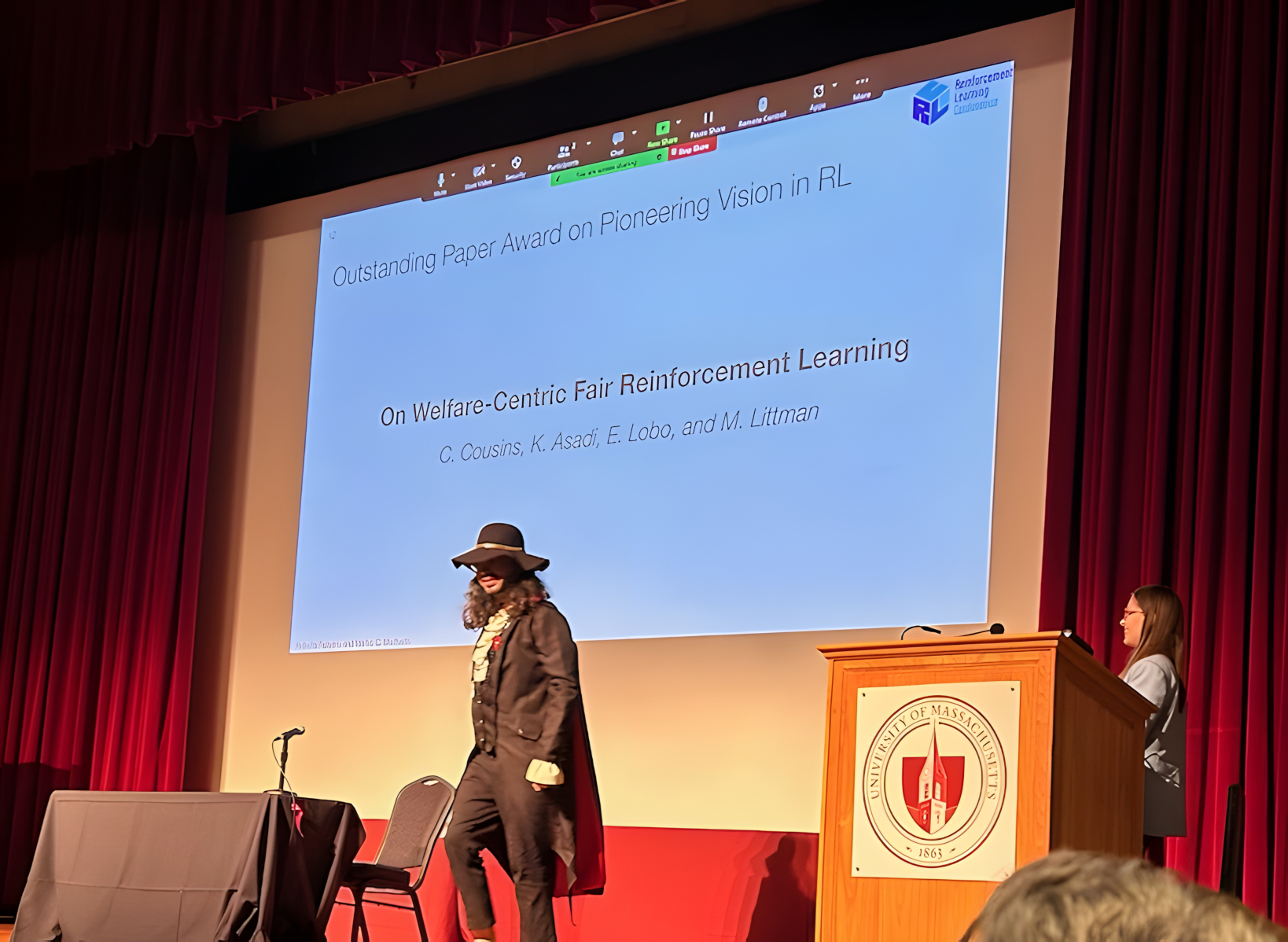

I am Cyrus Cousins, a postdoctoral scholar at Duke University and Carnegie Mellon University, working with professors Walter Sinnott-Armstrong, Jana Schaich Borg, Vincent Conitzer, and Hoda Heidari. I was formerly a visiting assistant professor at Brown University, where I also completed my doctoral studies under the tutelage of Eli Upfal. Before arriving at Brown University, I earned my undergraduate degree in computer science, mathematics, and biology from Tufts University. Known to some as The Count of Monte Carlo, I study all manner of problems involving sampling, randomization, and learning in data science and beyond, with a particular interest in uniform convergence theory and the rigorous treatment of fair machine learning. Specifically, my work treats theoretical bounds on generalization error in exotic settings, and applies such bounds to tasks of real-world interest, most notably in data science, empirical game theory, and fair machine learning.

My dissertation, Bounds and Applications of Concentration of Measure in Fair Machine Learning and Data Science, received the Joukowsky Outstanding Dissertation Prize, and I have been awarded the Dean's Faculty Fellowship (a visiting assistant professorship at Brown University) and the CDS Postdoctoral Fellowship (a postdoctoral scholarship at the University of Massachusetts Amherst).

My favorite theorem is the Dvoretzky-Kiefer-Wolfowitz Inequality, and my favorite algorithm is simulated annealing.

Research Statements and Application Materials

For posterity and the benefit of future applicants, my older research statements (graduate school, etc.) are available.

My graduate school research statement is also publicly available (5 pages).

The best (mostly current) overview of my research is given in my thesis summary (4 pages). The piece is a non-mathematical, but still somewhat technical, overview of my dissertation.

The best mathematical overview of my research for general audiences is given in this piece (5 pages). Here the focus is less on applications and implications, and more on intuition for the deeper mathematical connections between my various areas of study. Results are selected for elegance and simplicity, and the piece should be broadly accessible to all audiences with a basic grounding in probability and statistics.

Research Overview

In my research, I strive for a delicate balance between theory and practice. My theory work primarily lies in sample complexity analysis for machine learning, as well as time complexity analysis and probabilistic guarantees for efficient sampling-based approximation algorithms and data science methods [1] [2] [3] [4]. In addition to statistical analysis, much of my work deals with subtle computational questions, such as how to optimally characterize and bound the sample complexity of estimation tasks from data (with applications to oblivious algorithms, which achieve near-optimal performance while requiring limited a priori knowledge), as well as the development of fair-PAC learning, with the accompanying computational and statistical reductions between classes of learnable models.

On the practical side, much of my early work was led by the observation that modern methods in statistical learning theory (e.g., Rademacher averages) often yield vacuous or unsatisfying guarantees, so I strove to understand why, and to show sharper bounds, with particular emphasis on constant factors and performance in the small-sample setting. From there, I have worked to apply statistical methods developed for these approaches to myriad practical settings, including statistical data science tasks, the analysis of machine learning, and fairness-sensitive machine learning algorithms.

By blurring the line betwixt theory and practice, I have been able to adapt rigorous theoretical guarantees to novel settings. For example, my work on adversarial learning from weak supervision stemmed from a desire to apply statistical learning theory techniques in the absence of sufficient labeled data. Conversely, I have also motivated novel theoretical problems via practical considerations and interdisciplinary analysis; my work in fair machine learning led to the fair-PAC learning formalism, where power-means over per-group losses (rather than averages) are minimized. The motivation to optimize power-means derives purely from the economic theory of cardinal welfare, but the value of this learning concept only becomes apparent when one observes that many of the desirable (computational and statistical) properties of risk minimization translate to power-mean minimization.
